People often ask me about ‘adversarial thinking’ — that somewhat amorphous concept that defines security folk with the uncanny ability to mentally model how things can break.

Whenever this conversation comes up, I’m reminded of an anecdote by Bruce Schneier:

Uncle Milton Industries has been selling ant farms to children since 1956. Some years ago, I remember opening one up with a friend. There were no actual ants included in the box. Instead, there was a card that you filled in with your address, and the company would mail you some ants. My friend expressed surprise that you could get ants sent to you in the mail.

I replied: “What’s really interesting is that these people will send a tube of live ants to anyone you tell them to.”

I had a similar epiphany in my childhood with barcodes in supermarkets. After learning how to read them (back then, my reference material was a random ‘zine on a long-defunct BBS), I immediately wrote a program to print my own.

My curiosity was to see how the scanners would react, but I quickly realized that you could swap a legitimate barcode for a product with a much cheaper price (like most innovative criminal enterprises, this has already been done).
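Part of why a home-printed barcode scans at all is that the only integrity check inside it is a public checksum. Here is a minimal sketch, assuming the UPC-A format (the story does not say which symbology was involved); the function names are purely illustrative:

```python
def upc_a_check_digit(first_eleven: str) -> int:
    """Return the UPC-A check digit for the first 11 digits of a code."""
    if len(first_eleven) != 11 or not (first_eleven.isascii() and first_eleven.isdigit()):
        raise ValueError("expected exactly 11 ASCII digits")
    digits = [int(ch) for ch in first_eleven]
    # Counting positions from 1: odd positions are weighted 3, even positions 1.
    total = 3 * sum(digits[0::2]) + sum(digits[1::2])
    # The check digit brings the weighted total up to a multiple of 10.
    return (10 - total % 10) % 10


def is_valid_upc_a(code: str) -> bool:
    """True if a 12-digit string carries a consistent check digit."""
    return (
        len(code) == 12
        and code.isascii()
        and code.isdigit()
        and upc_a_check_digit(code[:11]) == int(code[11])
    )
```

The check digit catches misreads and typos. It says nothing about who printed the label or whether it belongs on that product, which is the kind of gap adversarial thinking notices first.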

‘Adversarial thinking’, the ‘security mindset’, whatever you call it, it’s apparent that some folks are naturally better at breaking things than others. The gulf is vast — professional red-teamers and bug hunters can immediately find glaring vulnerabilities in systems and processes that others have built and used for years without noticing them.

You can’t even ascribe the difference to some sort of training; most hackers never formally ‘learned’ this stuff.

Embracing adversarial thinking is not just a ‘good to have’, but a ‘must-have’. Without it as the foundation of your defense, you’re doomed to failure. This is far more than hiring a couple of pentesters every quarter; you have to have defenders who think this way. It’s more important than any technology you can buy, or process you can document.

Done right, it’s the single greatest way to improve your security.

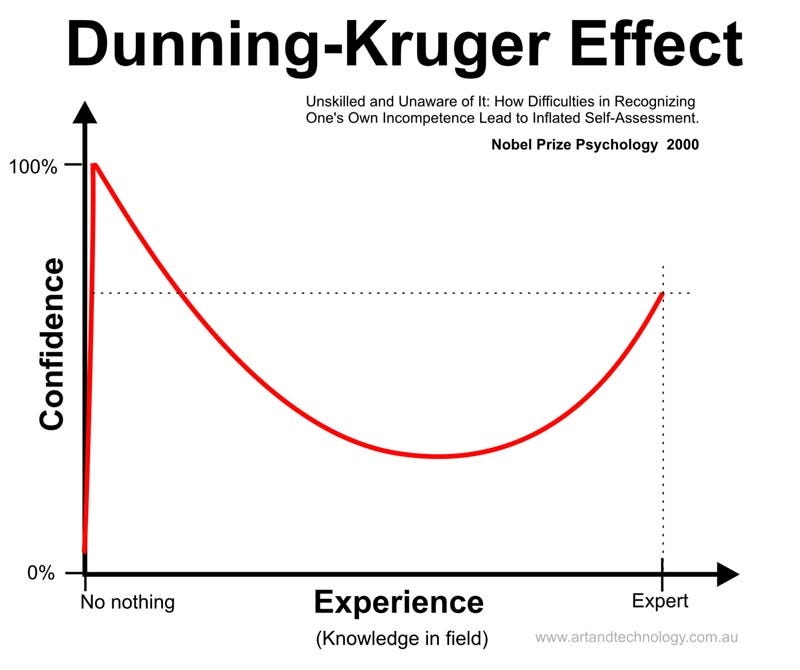

Dunning-Kruger and the Cybersecurity Defender

Unfortunately, the cybersecurity industry has built stark schisms between the types of people who can break systems, and those that build or defend them.

The difference is represented in our primary color nomenclature — The ‘red team’ versus the ‘blue team’. Many of the people playing team blue don’t come with the adversarial thinking mindset. They may have transitioned into security from an IT operations role, or just don’t have (or tap into) that way of looking at the world. The differences extend beyond color right into the vocabulary they use. Many blue-teams have the equivalent of elementary school security vocabulary.

Worryingly, they don’t ‘see it’, yet they believe they understand it. Their knowledge of how security systems break is superficial, and their confidence in the tenuous defenses they construct is high. They’re at the early phase of a form of security Dunning-Kruger Effect.

The Dunning-Kruger effect occurs where people fail to adequately assess their level of competence (or specifically, their incompetence) at a task and thus consider themselves much more competent than everyone else.

This lack of awareness is attributed to their lower level of competence robbing them of the ability to critically analyse their performance, leading to a significant overestimation of themselves.

The breakers, on the other hand, live, breathe, and sleep this stuff. They view even the physical world in terms of postally-delivered insects and in-store label replacement. It’s not a job, it’s a default world view.

For those amongst you who think like this, you’ll find yourself face-palming in frustration when you hear things like:

Nothing to worry about, we’ve blacklisted all the characters that cause SQL injection.

…

Nobody can break into this server, we’ve firewall-ed every port except HTTP!

…

You have to pen-test my environment only through the firewall, don’t spear-phish my staff, and only target this specific IP address. You must break in under 30 minutes, while standing on a donkey, with your dominant hand tied behind your back.

(The last one is from an actual engagement. Promise).
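The first of those quotes is worth unpacking. Below is a minimal sketch of why stripping ‘dangerous’ characters is not the same as keeping code and data apart. It uses Python’s built-in `sqlite3` module; the `users` table, the blacklist, and the function names are all hypothetical, made up for illustration:

```python
import sqlite3

# Hypothetical blacklist: the characters a defender might assume "cause" SQL injection.
BLACKLIST = ["'", '"', ";", "--"]


def strip_blacklisted(value: str) -> str:
    """Remove every blacklisted token from the input."""
    for token in BLACKLIST:
        value = value.replace(token, "")
    return value


def make_db() -> sqlite3.Connection:
    """Build a throwaway in-memory database with three users."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [(1, "alice"), (2, "bob"), (3, "carol")],
    )
    return conn


def lookup_blacklisted(conn: sqlite3.Connection, user_id: str) -> list:
    # Still vulnerable: the filtered input is concatenated into the SQL text,
    # and a numeric context needs no quote characters to be abused.
    query = (
        "SELECT name FROM users WHERE id = "
        + strip_blacklisted(user_id)
        + " ORDER BY id"
    )
    return [row[0] for row in conn.execute(query)]


def lookup_parameterized(conn: sqlite3.Connection, user_id: str) -> list:
    # The input is bound as a value and is never parsed as SQL.
    rows = conn.execute(
        "SELECT name FROM users WHERE id = ? ORDER BY id", (user_id,)
    )
    return [row[0] for row in rows]
```

Passing `1 OR 1=1` to `lookup_blacklisted` returns every row, since the payload contains nothing on the blacklist. `lookup_parameterized` treats the same string as a plain value that matches no user.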

This schism leads to defenders complaining that red-teams have huge egos (well okay, many do), and all they do is break in, throw down a report, and ride into the sunset. The onus has been placed on offensive teams to learn to couch their language in order to have their findings accepted.

Hacker subculture values intellectual honesty over political correctness and forced authority figures, so you can imagine how well this tends to go with the typical red team personality who (often rightly) wants to call his defender counterpart a negligent <expletive>, but is constrained from doing so.

The Maker / Breaker Myth

As an aside, there’s another falsehood that must be addressed. Some people assume that breakers are only good at tearing things down. This fits nicely into the black-and-white, good vs. evil narrative that often accompanies hyped cybersecurity headlines in the news.

The reality couldn’t be further from the truth. Most people who excel in adversarial thinking are also great at making things.

This extends far beyond their GitHub repositories (though there’s plenty of evidence of creation there): they write, they woodwork, they build companies.

Can Adversarial Thinking Be Learned?

Yes.

Now that we’ve broken Betteridge’s law of headlines, the answer is more nuanced. It can be learned, but I don’t believe it can be taught.

For example, a neophyte quality assurance tester (a great place to find budding penetration testers) may come into the job like Neo in The Matrix; never really aware of their latent abilities until their awakening. Over time, they discover ‘the knack’, and before you know it, you have a version of IBM’s infamous “Black Team”.

This bodes badly for the legions of training programs that promise to remold you into some variant of an OCH (Officially Certified Hacker) in five days or your money back (not).

Those certifications either favor the lowest common denominator of tool-teaching over thought-application, or, if they do have strong practical testing, the people who do well likely already had the mindset in the first place. The course served to teach them the tradecraft, not the art.

There are notable exceptions, such as the excellent Pentester Academy, where the focus is foundational concepts over tools, and the delivery medium requires a personal passion that often (but not always) goes hand-in-hand with the security mindset.

In the spirit of helpful lists, here are some ways to tickle the adversarial thinking funny bone. If you find these fun, you may just ‘have it’:

- Deconstruct something outside of computer security

Look for the postal-mailed ants in the world around you.

- Try lock-picking

Let’s see. An exercise in early-stage frustration, requires dedication to master, succeeding is its own reward, requires black-box reverse engineering skills. Sounds very much like computer security. Look no further than the amazing Deviant Ollam’s books to start.

- Play a Strategy Board Game

As cliched as it may sound, games like chess, Chinese checkers, and Go have strong adversarial thinking precepts including ‘if this, then that’ thinking, and pattern identification.

Hiring Adversarial Thinkers

Since certifications are a bad metric for identifying the skill-set, analyzing experience is a better way to look for the right stuff.

This doesn’t mean hire the person who did 6 years of ‘VA/PT’ at $CONSULTING_COMPANY. Instead, ask about how he or she got started with security (look for ant-mailer thinking), and transition into the war-stories of their hardest real-life and computer security hacks.

If they enthusiastically delve into the time they had to balance a nutmeg on a data-center door in order to prevent the fire alarm from sounding, you might have the right person.

Bonus Points: If they reveal confidential information like client names, you can stop right there — they may be good, but you don’t want someone who’s a talker.

If you’re hiring fresh off the boat, you can’t count on past experience, so a far less scientific process will have to suffice. Lateral thinking questions (‘list 10 ways to switch off a light bulb without touching the switch’) are alright, but can quickly end up being dubiously effective.

We’ve always favored giving prospects a really difficult practical challenge, well outside of the realm of their existing domain knowledge. The challenge has different levels, ranging from elementary to either unsolvable or with multiple correct answers. Look for those who:

- Take the time to learn the fundamental concepts of the problem

- Apply creative solutions, even if they are wrong (much of adversarial thinking involves extensive trial and error).

- Talk enthusiastically about the levels they could not solve and how they approached them (as opposed to those who try to downplay the failure).

Adversarial Thinking in Summary

- Adversarial thinking is an innate way of looking at the world, and security specifically. Hackers tend to have it.

- Embracing this form of thinking can revolutionize how you design your security. However, it can’t just be adopted; it must be fundamentally embedded in your security team’s psyche (I’m trying really hard not to use the overused ‘baked in’).

- Many (with caveats) defenders lack this way of thinking, leading to a misplaced idea of resilience, illustrated by the Dunning-Kruger Effect, and manifested in genuine surprise when things get pwned.

- The benefits of red-teaming often suffer from the inability to bridge this personality divide between the ones assessing and the ones on the receiving end.

- It’s a myth that those that break cannot build.

- One can learn to think adversarially, but one can’t be taught how to. You can also train the capability, and make identifying it a part of your hiring process.

Caveats and Clarifications

- Excellent defenders and blue-teamers exist. Unfortunately, most defenders haven’t made the leap. It’s our hope that acknowledging the adversarial thinking gap will help address that.

- The word ‘hacker’ is used loosely, with both its context in security, as well as the purer definition.

- Red-teamers can be somewhat excused for their egos. Most of their job involves dealing with people who fail at what they consider elementary. They’re also often part of high-performance teams, with healthy internal intellectual competition. That doesn’t excuse being arrogant — you can be a great pen-tester and still be a lousy security professional.
